This is the first in a four-part series covering serverless architecture, a variation of cloud computing now used by nearly 40% of companies globally, from startups to major, established businesses.

Serverless Architecture Overview

The goal of this series is to introduce developers, engineers and technical business leaders to the opportunities of serverless architecture and how it may be used alone or in combination with other computing architectures to create the most efficient IT solutions for a variety of business applications.

We’re starting with an introduction, and in the next two articles we’ll drill down deeper into the components and features of serverless. Our final installment will present a case study in serverless architecture.

In his acclaimed best-selling book, motivational speaker Simon Sinek encourages business leaders to Start with Why. So, that’s where we’ll begin our introduction to serverless computing (“serverless” for short). The “why” most relevant to our discussion is the deficiency of server-based computing for many business applications and how serverless can fill the void.

What is Serverless Architecture?

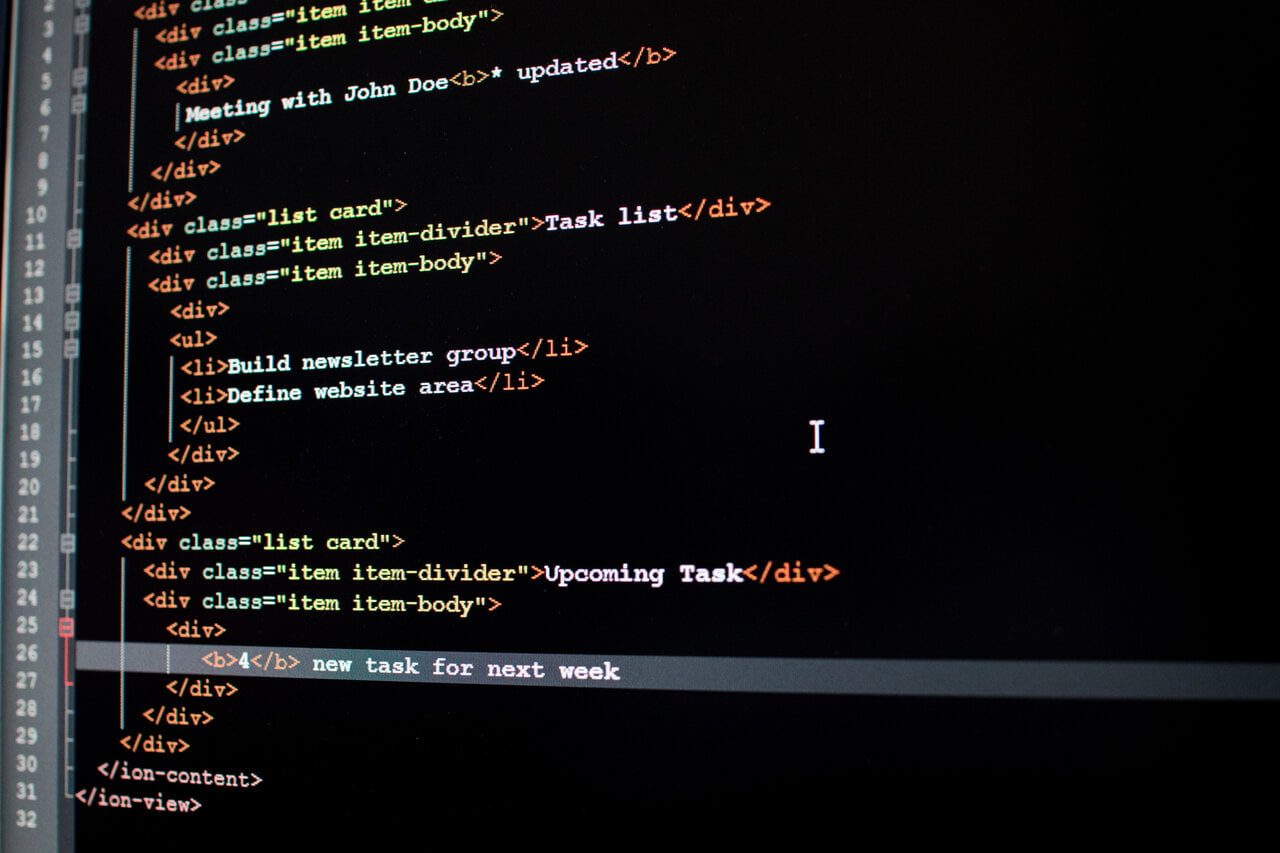

Serverless Architecture is a software design pattern where applications are developed and deployed in an environment where server management and capacity planning operations are abstracted away from the developer. This architecture allows developers to build and run applications and services without the necessity of managing infrastructure-related activities.

In a serverless architecture, the cloud provider dynamically manages the allocation and provisioning of servers. The servers are still present, but the management, scaling, patching, and administrative operations are handled automatically by the cloud provider. This allows developers to focus solely on writing code and delivering value to the business, thus improving operational efficiency and reducing time to market.

Aligning Server Capacity With Demand

In a traditional computing environment, organizations are responsible for managing the applications they run on their servers, as well as for provisioning and managing their servers’ resources, uptime, maintenance and security updates. They’re also responsible for scaling server capacity up and down to align with demand. The latter is particularly significant because having “just enough” server capacity presents challenges in rapid growth environments and when volume spikes unexpectedly. Having too little server capacity can cause business operations to fail, with obvious dire consequences. Almost equally concerning, however, is having too much server capacity. Although extra capacity may seem like insurance against volume spikes, the total cost of ownership (TCO) of unused server capacity can have a significant negative impact on a company’s bottom line.

Businesses can avoid many of these server-focused issues by outsourcing their computing, but aligning server capacity with demand is still an issue, even with cloud providers. A key benefit of processing in a public cloud is the provider’s ability to seamlessly auto-scale server capacity in real time for its clients, so businesses never need to worry about applications failing for lack of server capacity (within the provider’s limits on RAM, CPU and I/O operations, of course).

But cost can still be a challenge because some cloud providers’ tier-limit pricing requires businesses to estimate future usage, with a strong incentive to overcommit to avoid volume levels that exceed their tier. Overcommitting means businesses still face the likelihood of paying for server capacity they’re not using. And even when cloud providers make it relatively easy and cost-effective to ramp capacity up or down in a non-serverless environment, businesses still must be careful of server fleet configuration and management issues, such as determining the number of servers needed, their configuration, how to scale the servers and balance the load, and what to do when servers are idle.

Enter serverless computing, a cloud-based option that combines dynamic auto-scaling, built in right out of the box, with a pricing structure based on the actual amount of server capacity businesses use.
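To make the pricing difference concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it (the per-server price, the per-million-request price, the capacity of one server and the traffic figures) is a hypothetical placeholder chosen for illustration, not any provider's actual rate.

```python
import math


def provisioned_cost(peak_rps: float, rps_per_server: float,
                     server_monthly_price: float) -> float:
    """Monthly cost when capacity is sized for peak load and paid for even when idle."""
    servers = math.ceil(peak_rps / rps_per_server)
    return servers * server_monthly_price


def pay_per_use_cost(requests_per_month: int, price_per_million: float) -> float:
    """Monthly cost when the bill tracks only the requests actually served."""
    return requests_per_month / 1_000_000 * price_per_million


# Hypothetical workload: peaks at 500 requests/second,
# but serves only 20 million requests over the whole month.
fixed = provisioned_cost(peak_rps=500, rps_per_server=100, server_monthly_price=70.0)
metered = pay_per_use_cost(requests_per_month=20_000_000, price_per_million=4.0)

print(f"provisioned: ${fixed:.2f}, pay-per-use: ${metered:.2f}")
# prints "provisioned: $350.00, pay-per-use: $80.00"
```

With these assumed figures, the spiky workload is far cheaper on a pay-per-use basis. The comparison can flip for steady, high-volume traffic: at 200 million requests a month, the same metered rate comes to $800.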

Serverless Computing Defined

The first thing to understand about serverless computing is that it isn’t actually serverless.

It’s how cloud providers, including AWS, Azure, Google Cloud Platform and others, deploy their servers to deliver backend services to their clients on an as-needed basis: the provider executes a piece of client code on demand and dynamically allocates the server resources needed to run it. The code typically runs inside stateless, or non-dedicated, containers and is triggered by events, such as HTTP requests, database events, queuing services, monitoring alerts, file uploads and scheduled events (cron jobs). This shared infrastructure-based approach means users incur fees only for the actual server capacity they use, avoiding the overhead of extra capacity or idle servers, as well as issues associated with tiered pricing. The pricing model is a major differentiator between “standard” cloud computing and serverless.
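The event-triggered model described above can be sketched in a few lines of Python. This is a simplified illustration, not any provider's actual API: the event shapes, the `type` field, the `on` decorator and the `dispatch` function are all assumptions made for the example. On a real platform, the provider does the routing, and each function is typically deployed on its own.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]

# Registry mapping a (hypothetical) event "type" to the function that handles it.
HANDLERS: Dict[str, Callable[[Event], Dict[str, Any]]] = {}


def on(event_type: str):
    """Decorator that registers a function as the handler for one event type."""
    def register(func):
        HANDLERS[event_type] = func
        return func
    return register


@on("http_request")
def handle_http(event: Event) -> Dict[str, Any]:
    name = event.get("query", {}).get("name", "world")
    return {"status": 200, "body": f"Hello, {name}!"}


@on("file_upload")
def handle_upload(event: Event) -> Dict[str, Any]:
    return {"status": 200, "body": f"Processing {event['path']}"}


@on("schedule")
def handle_schedule(event: Event) -> Dict[str, Any]:
    return {"status": 200, "body": "Nightly job started"}


def dispatch(event: Event) -> Dict[str, Any]:
    """Stand-in for the platform: route an incoming event to its function."""
    handler = HANDLERS.get(event.get("type"))
    if handler is None:
        return {"status": 400, "body": "Unsupported event type"}
    return handler(event)


print(dispatch({"type": "http_request", "query": {"name": "Ada"}}))
print(dispatch({"type": "file_upload", "path": "uploads/report.csv"}))
```

Each handler keeps nothing between calls and takes everything it needs from the event, which is what allows the provider to run it in any available container.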

In a serverless environment, the cloud provider assumes responsibility for 100% of server and infrastructure requirements, including configuration, maintenance, scaling and fault tolerance, giving developers the freedom to build and run applications without the hassle of managing the underlying infrastructure.

This freedom creates the opportunity for “serverless architecture,” an approach to software design that enables developers to focus on writing and deploying code, while their cloud provider provisions servers to run their applications, databases and storage systems at any scale.

If you’re not a developer, you may wonder whether managing server space and infrastructure is a big deal. It is.

Managing servers—along with handling routine tasks, such as security patches, capacity management, load balancing and scaling—eats up time and resources, which can be a challenge for smaller companies because it takes developers away from building and maintaining actual applications. But server and infrastructure management aren’t necessarily easy even for larger businesses. At larger organizations, servers and infrastructure are likely managed by infrastructure teams, eliminating the need for developers to be hands-on in those areas, but coordinating with the infrastructure team still requires developers to take time from writing code.

Pros and Cons of Serverless Architecture

We’ve already established two clear benefits of serverless computing: it frees developers to focus on building business value through their application code rather than dealing with server and infrastructure issues, and it reduces operational costs because businesses pay only for the server capacity they use.

Let’s dive into additional advantages of serverless:

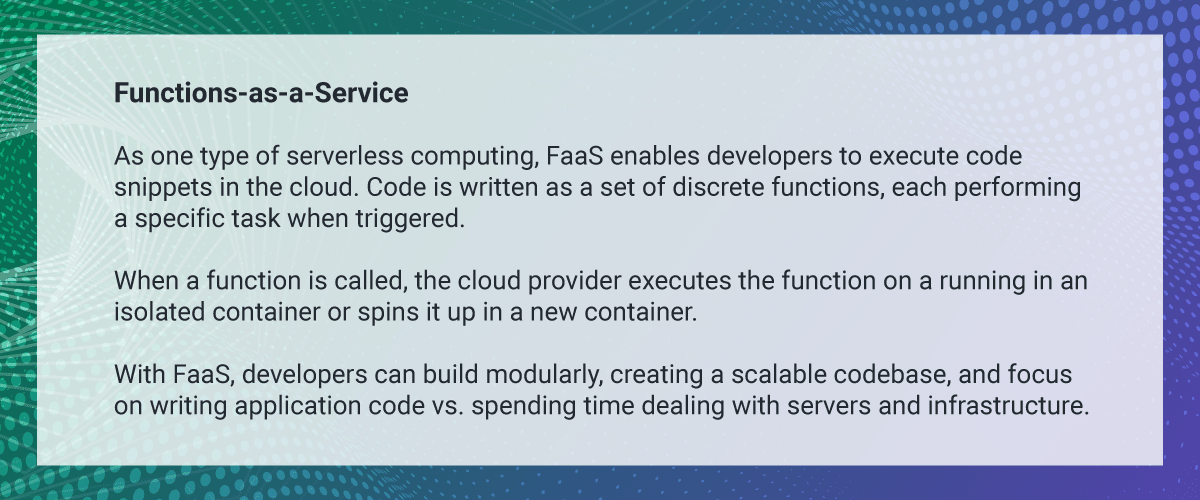

- Simplified backend code. With functions-as-a-service (FaaS), developers can create simple functions that perform independently for a single purpose, such as serving a single API endpoint.

- Quicker turnaround. Serverless architecture can significantly cut time to market. Instead of a complicated deploy process to roll out fixes and new features, developers can add and modify code piecemeal.

- Productivity. The productivity advantage has several elements: (a) because developers can deploy their code without the burden of managing servers or infrastructure, delivery cycles and operational scaling are accelerated, (b) cloud providers offer dozens of serverless development tools and API automation plug-ins to further accelerate developer productivity and (c) serverless presents a lower entry threshold for new developers, who can become productive faster.

- Extensibility. Serverless architectures are easily extensible, so additional functions may be added to address new opportunities.

- Elasticity. Available resources match current demands as closely as possible, provisioning and deprovisioning automatically and incrementally. Think of serverless elasticity as “scalability on steroids.”

- Enhanced stability. Serverless provides full abstraction over the frequently changing execution environment, so code is more stable and easier to maintain.

- Setup ease. Even at large scale, serverless is easy to set up.

Challenges of Serverless Architecture

Despite its significant advantages, serverless computing also has disadvantages, including these:

- Cold start latency. When a serverless function hasn’t been called recently, it’s suspended, which saves resources and avoids over-provisioning. When the suspended function is next called, the cloud provider must restart it (a “cold start”), which adds time to the response. The latency of a cold start depends on the implementation of the specific cloud provider, as well as the implementation of the function. The estimate for one well-known cloud provider ranges from a few hundred milliseconds to a few seconds, depending on specifics, such as runtime, programming language and function size. Although a challenge, cold start latency is improving as cloud providers become more skilled at optimization.

- Loss of control. This challenge can manifest in several ways, such as changes in pricing or adjustments to SLAs, as well as unexpected limits, loss of functionality and forced API upgrades.

- Testing. Integration testing—evaluating how frontend and backend components interact—is difficult to perform in a serverless environment. In addition, because the serverless infrastructure is highly abstracted, it’s harder to monitor and visualize how applications are running, adding to the challenge of debugging performance and stability issues.

- Vendor control/lock-in. Although it’s theoretically possible to mix and match serverless elements—such as databases, messaging queues and APIs—from different cloud providers, services from a single provider integrate most seamlessly, potentially reducing a business’ options to partner with other providers.

- Health and performance monitoring. It’s important to have real-time visibility into how each function of a serverless application is working because “functions typically travel through a complex web of microservices, and cold starts, misconfigurations and other errors can occur at any node and cause a ripple effect throughout your environment.” It may be necessary to engage a third party to help with this monitoring.

- Ability to DoS yourself by mistake. The serverless environment is elastic and scalable enough to handle almost any unexpected increase in load, but this flexibility presents a potential downside: a coding mistake can turn into a self-inflicted denial of service (DoS). Developers must scrupulously avoid introducing code that, for example, causes a function to trigger itself. In that situation, serverless could continue to automatically provision additional capacity to meet the errant demand. Fortunately, major cloud providers have a backstop on concurrent executions to keep such a situation from getting out of control.
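As an illustration of the last point, one defensive pattern (an assumption on our part, not something a provider enforces for you) is to carry a hop counter in the event and refuse to continue once it passes a limit. In the Python sketch below, `MAX_HOPS`, the `hops` field and the `invoke` callback are hypothetical names standing in for whatever mechanism re-triggers the function, such as a queue message or a file write.

```python
MAX_HOPS = 3  # hypothetical safety limit chosen for this example


def process(event: dict, invoke) -> dict:
    """Handle an event that may trigger a follow-up invocation.

    `invoke` is a stand-in for the re-triggering mechanism; it is not a real API.
    """
    hops = event.get("hops", 0)
    if hops >= MAX_HOPS:
        # Stop instead of fanning out again; in practice this would also raise an alert.
        return {"status": "stopped", "hops": hops}
    # Pass the incremented counter along so downstream invocations see the chain length.
    return invoke({**event, "hops": hops + 1})


# Simulate the bug: the function's output triggers the same function again.
calls = []


def fake_invoke(event: dict) -> dict:
    calls.append(event["hops"])
    return process(event, fake_invoke)


result = process({"payload": "resize image"}, fake_invoke)
print(result, len(calls))
# prints "{'status': 'stopped', 'hops': 3} 3"
```

Without the guard, the simulated loop would never end. With it, the chain stops after three follow-up invocations, well before a provider-level concurrency limit would have to step in.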

Challenges shared with microservices. Some additional challenges are not specific to serverless but are common in highly distributed systems, serverless included:

- Transactionality. Requests typically are processed by a chain of FaaS executions–independent and stateless. So, how do you handle an error somewhere in the middle of an execution chain? How do you roll back the transaction? And, how do you manage distributed transactions across multiple microservices and functions?

- Service discovery. This is the mechanism that lets each service or function know about the others (e.g., find another function's address and invoke it). How does one service know to call the correct version of another service?

- Complicated state management. Serverless functions are stateless, which is good for some apps but not for others. This means developers must find a way to store the application state (e.g., session and operational data) elsewhere.
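The state management point can be sketched as follows, in Python. The `InMemoryStore` class is a hypothetical stand-in for an external database or cache, and the cart example, key format and field names are assumptions made for illustration.

```python
from typing import Dict


class InMemoryStore:
    """Stand-in for an external store (database, cache); for illustration only."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def add_to_cart(event: dict, store: InMemoryStore) -> dict:
    """Stateless handler: anything it must remember is read from and written to the store."""
    key = f"cart:{event['session_id']}"
    count = store.get(key) + event.get("quantity", 1)
    store.put(key, count)
    return {"session_id": event["session_id"], "items_in_cart": count}


store = InMemoryStore()
add_to_cart({"session_id": "abc", "quantity": 2}, store)
print(add_to_cart({"session_id": "abc"}, store))
# prints "{'session_id': 'abc', 'items_in_cart': 3}"
```

Because the function itself holds nothing between calls, any container can serve the next request for the same session. A production store would also need to handle concurrent updates safely (for example, with an atomic increment), which this sketch does not attempt.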

Serverless vs. Server Architecture

Serverless and server-based architectures are two different approaches to building and deploying applications. Here's a comparison of the two:

Serverless Architecture:

- No server management: Developers focus on writing code without worrying about server provisioning, maintenance, or scaling.

- Pay-per-use: You only pay for the actual execution time and resources consumed by your application.

- Automatic scaling: The cloud provider automatically scales your application based on the incoming requests or events.

- Event-driven: Functions are triggered by events such as HTTP requests, database changes, or queue messages.

- Stateless: Each function execution is independent and stateless, with no shared memory between invocations.

- Reduced operational overhead: No need to manage servers, operating systems, or runtime environments.

- Faster development and deployment: Serverless allows for rapid development and deployment of small, focused functions.

- Examples: AWS Lambda, Google Cloud Functions, Azure Functions.

Server-based Architecture:

- Server management: Developers or operations teams are responsible for provisioning, maintaining, and scaling servers.

- Fixed cost: You pay for the servers regardless of the actual usage, even if they are idle.

- Manual scaling: Scaling requires manual intervention or setting up auto-scaling policies based on metrics.

- Long-running processes: Applications run continuously on servers, handling requests and maintaining state.

- Stateful: Servers can maintain state between requests, allowing for shared memory and persistence.

- More control: You have full control over the server configuration, operating system, and runtime environment.

- Suitable for complex applications: Server-based architectures are suitable for applications with complex business logic and long-running processes.

- Examples: Traditional web servers, application servers, and virtual machines.

Considerations:

- Performance: Serverless can have cold starts (initial delay) for infrequently invoked functions, while server-based architectures provide consistent performance.

- Cost: Serverless can be more cost-effective for applications with intermittent or unpredictable traffic, while server-based architectures may be more cost-effective for steady, high-traffic applications.

- Vendor lock-in: Serverless architectures are often tied to a specific cloud provider's ecosystem, while server-based architectures allow for more flexibility and portability.

- Debugging and testing: Debugging and testing can be more challenging in serverless due to the distributed nature of functions and limited runtime visibility.
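To illustrate the cold-start consideration listed under Performance, here is a minimal Python simulation. It assumes, as is common on major platforms but worth verifying for yours, that a warm container keeps module-level objects alive between invocations. The `_create_client` function and its 50-millisecond delay are placeholders for real initialization work, such as loading configuration or opening connections.

```python
import time

_client = None  # module-level object; survives between invocations in a warm container


def _create_client() -> dict:
    """Simulate expensive one-time setup."""
    time.sleep(0.05)  # placeholder for real initialization work
    return {"connected": True}


def handler(event: dict, context=None) -> dict:
    """Reuse the client when the container is warm; build it only on a cold start."""
    global _client
    cold = _client is None
    if cold:
        _client = _create_client()
    return {"cold_start": cold}


first = handler({})
second = handler({})
print(first, second)
# prints "{'cold_start': True} {'cold_start': False}"
```

Only the first call pays the setup cost. This kind of reuse is best treated as an optimization rather than as dependable state, since the provider can discard a container at any time.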

Ultimately, the choice between serverless and server-based architectures depends on the specific requirements, scalability needs, cost considerations, and the nature of your application. Many modern applications use a combination of both approaches, leveraging serverless for certain tasks and server-based architectures for others.

Making the Decision: Serverless or Server-based Architecture

So, how does a business decide whether to choose a serverless solution to support its applications?

Like everything in business, it depends on what you want to accomplish.

As a general guideline, however, relatively simple, lightweight applications—particularly those that run at scale—are best suited for serverless. Serverless is also an excellent choice for short-lived tasks and managing workloads with infrequent or unpredictable traffic. On the other hand, serverless is poorly suited for applications that involve a large number of continuous, long-running processes.

Also up for consideration are hybrid options, because the decision to go serverless isn’t necessarily an either/or choice. A hybrid solution may be the answer in some situations—deploying serverless only where it offers the greatest benefits.

Based on our experience, the applications and industries with the greatest obvious potential for adopting serverless include:

- POCs, MVPs and startups. Serverless facilitates a quick start and fast time to market. It’s also a great solution from a cost perspective because of relatively low volumes in early days, interspersed with traffic spikes relating, for example, to demonstrations and promotional activities.

- Events. Platforms where many devices request access to a range of file types benefit from serverless, as do ticketing platforms, because activity ramps up and down sharply around each event.

- Multimedia processing. The massive quantity of data involved in multimedia processing requires significant computing resources. Serverless provides exceptional scalability and load flexibility in these scenarios.

- Live video broadcasting. Serverless enables collection of audio and video streams from multiple sources, which are subsequently synthesized and presented to viewers in a single view.

- Environments with fluctuating demand. Many platforms experience significant fluctuations in demand. A serverless environment allows for massive shifts in traffic volume without the need for platform owners to invest in additional in-house computing resources to handle the load.

This list, of course, isn’t comprehensive. We encourage you to read “Serverless Use Cases,” above, for more ideas on the types of requirements that serverless could address with significant advantage.

Need help deciding whether serverless is right for your applications?

If you’re intrigued by the benefits of serverless, we’re happy to collaborate with you to evaluate whether serverless is the right approach for your business application. And if you need implementation support, call on the resources of our expert team. Contact us!