Kafka Consulting Services Built on Deep Distributed Systems Experience

Apache Kafka powers mission-critical data infrastructure for the world's most demanding applications. With over a decade of experience building scalable, fault-tolerant systems for fintech payment processing, real-time ticketing platforms, and high-volume event streaming, we bring the architectural insight and hands-on expertise to help you implement Kafka successfully—whether you're migrating from legacy messaging systems, optimizing existing clusters, or building event-driven architectures from scratch.

Why Choose Softjourn for Apache Kafka Consulting

Handle millions of messages per second with architectures proven in production at payment processors, ticketing platforms, and real-time analytics systems. We design Kafka clusters that grow with your business, from thousands to millions of events without performance degradation or architectural rewrites.

Reduce latency, eliminate bottlenecks, and maximize throughput with tuning strategies developed across hundreds of production deployments. We analyze partition strategies, replication factors, compression algorithms, and consumer group configurations to ensure your Kafka infrastructure performs at peak efficiency.

Build fault-tolerant event streaming platforms with proper replication, disaster recovery, and monitoring. Our architects implement multi-datacenter strategies, automated failover mechanisms, and comprehensive observability to ensure your critical data pipelines maintain 99.99% uptime even during infrastructure failures.

Connect Kafka to your existing technology stack: databases, APIs, cloud services, and legacy systems. With deep experience in Kafka Connect, custom connectors, and streaming data pipelines, we ensure reliable data flow between Kafka and your PostgreSQL, MongoDB, Elasticsearch, Salesforce, AWS, Azure, and legacy applications.

Design event-driven architectures that eliminate tight coupling and enable true microservices independence. Our solutions architects evaluate your data flows, domain boundaries, and scaling requirements to create Kafka topic strategies, schema designs, and streaming patterns that support business agility and technical evolution.

Transition from RabbitMQ, ActiveMQ, IBM MQ, or monolithic databases to Kafka without service disruption. We create detailed migration roadmaps with parallel running strategies, data validation frameworks, and rollback procedures to modernize your messaging infrastructure while maintaining business continuity throughout the transition.

Ready to discuss your Kafka architecture or optimization case?

Apache Kafka Consulting Services

From initial architecture design through production optimization and ongoing support, we provide comprehensive Kafka consulting services for organizations building event-driven systems. Our team brings 10+ years of distributed systems experience, having implemented high-volume streaming platforms for fintech payment processing, real-time ticketing inventory, and data-intensive applications requiring sub-second latency at scale.

Why Apache Kafka?

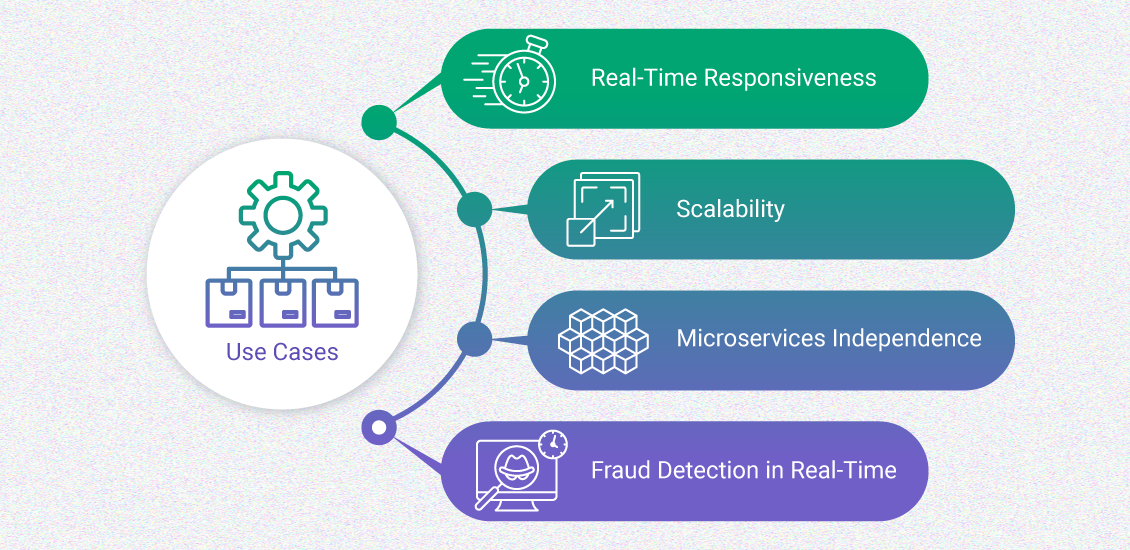

Modern applications demand real-time responsiveness, but traditional point-to-point integrations and batch processing create brittle systems that can't scale. Apache Kafka decouples data producers from consumers, enabling true microservices independence while maintaining millisecond-level data freshness.

In fintech, this means fraud detection systems analyzing payment streams in real-time. For ticketing platforms, it means inventory updates propagating instantly across channels. For any data-intensive business, it means building systems that grow with demand rather than collapsing under it.

Our Kafka Consulting Approach

Strategic foundation for scalable event streaming. We evaluate your current infrastructure, data flows, and business requirements to design Kafka architectures that support both immediate needs and future growth. Our assessments cover topic design strategies, partition distribution, replication requirements, security models, and integration patterns. You receive detailed architecture diagrams, implementation roadmaps, and capacity planning guidance.

Production-ready cluster deployment. Our engineers provision Kafka clusters on your infrastructure—whether on-premises, AWS, Azure, or hybrid environments. We configure brokers, implement monitoring and alerting, set up schema registries, establish security protocols, and deploy Kafka Connect for data integration. Every implementation includes comprehensive documentation, runbooks, and knowledge transfer sessions for your team.

Maximize throughput and minimize latency. We analyze producer configurations, consumer group patterns, broker resource utilization, and network throughput to eliminate bottlenecks. Our optimization work includes partition rebalancing, compression strategy evaluation, batching configuration, and memory management tuning. Most engagements achieve 40-60% performance improvements through systematic analysis and targeted adjustments.

Risk-free transitions from legacy messaging. We create detailed migration plans with parallel running strategies, allowing you to validate Kafka behavior before decommissioning existing systems. Our engineers develop data synchronization mechanisms, implement dual-write patterns, create comprehensive testing frameworks, and establish rollback procedures. Migrations complete with zero message loss and minimal service disruption.

Seamless data pipeline integration. We implement Kafka Connect frameworks and develop custom connectors for systems without existing integration options. Whether connecting to proprietary databases, legacy mainframes, or specialized SaaS platforms, we build reliable, performant connectors that maintain data consistency and handle failure scenarios gracefully. All connectors include monitoring, error handling, and documentation.

Empower your team with Kafka expertise. We provide hands-on training covering Kafka fundamentals, operations, troubleshooting, and best practices. Training programs include architecture workshops, operational runbooks, performance tuning guides, and disaster recovery procedures. Your team gains the confidence to operate and evolve Kafka infrastructure independently while knowing expert support remains available when needed.

With over a decade architecting event streaming platforms for data-intensive businesses, we bring deep domain knowledge of how Apache Kafka solves real-world challenges in fintech, ticketing, and media industries. Our experience spans payment processing systems handling millions of transactions daily, ticketing platforms managing real-time inventory across channels, and streaming media architectures delivering content to global audiences.

Real-time payment processing, fraud detection, transaction monitoring, and financial data aggregation require event streaming platforms that maintain strict ordering guarantees, support exactly-once semantics, and handle regulatory compliance. We architect Kafka solutions for payment processors, digital banks, and fintech platforms processing millions of transactions with sub-second latency.

Ticketing platforms face extreme traffic spikes, real-time inventory synchronization across channels, and zero-tolerance for overselling. Kafka enables event-driven architectures that propagate inventory changes instantly, handle flash sales without system collapse, and maintain consistency across mobile apps, web platforms, and box office systems simultaneously.

Streaming media platforms, content delivery networks, and viewer analytics systems demand high-throughput event processing for real-time recommendations, ad insertion, and audience engagement tracking. We build Kafka architectures that handle millions of viewer events, coordinate content delivery across regions, and power personalization engines with millisecond data freshness.

Engagement Models

Need expert guidance on your Kafka architecture or performance challenges?

Kafka-Powered Solutions in Production:

Our Kafka Consulting Process

Our Technology Stack

Building successful Kafka implementations requires deep expertise across distributed systems, streaming architectures, and the broader technology ecosystem. With 10+ years architecting scalable platforms for fintech payment processing and real-time ticketing systems, we bring comprehensive knowledge of the tools, frameworks, and integration patterns that make event streaming platforms succeed in production. Our experience spans cloud platforms, programming languages, databases, monitoring tools, and the complete Kafka ecosystem.

From initial architecture through production optimization, we leverage our technology depth to design solutions that integrate seamlessly with your existing infrastructure while positioning you for future innovation.

Client Testimonials

Tacit Corporation chose Softjourn as their technology partner, impressed by our technical expertise and direct approach. Brenda Crainic, CTO and Co-Founder of Tacit, highlighted, "We grew a lot as a company over the last 12 years and our processes changed, many of the current development practices being initiated by the team. I count a lot of my team’s expertise and I am confident in our ability to deliver cutting-edge technology for our clients."

Our team’s dedication to understanding Tacit's needs has been instrumental in enhancing their platform’s capabilities, ensuring robust custom software development solutions. This ongoing collaboration underscores our commitment to delivering high-quality, innovative services that support our clients' visions.

Frequently Asked Questions

Kafka is fundamentally a distributed event streaming platform rather than a traditional message queue, which changes how you think about data architecture. Unlike RabbitMQ or ActiveMQ, which focus on point-to-point message delivery and consumption, Kafka persists all events to disk and allows multiple independent consumers to read the same event stream at their own pace.

Key architectural differences:

- Persistence: Kafka retains all messages for a configurable period (days, weeks, or indefinitely), enabling event replay and new consumer applications to process historical data

- Scalability: Kafka horizontally scales to handle millions of messages per second through partitioning, while traditional message queues typically scale vertically with limitations

- Multiple consumers: Different applications can consume the same event stream independently without affecting each other—critical for event-driven microservices architectures

- Ordering guarantees: Kafka maintains strict ordering within partitions, essential for financial transactions, inventory updates, and event sourcing patterns

When to choose Kafka: High-throughput event streaming, microservices communication, event sourcing, real-time analytics, log aggregation, and building event-driven architectures.

When traditional queues suffice: Simple request-response patterns, low-volume messaging, or scenarios where immediate message deletion after consumption is required.
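To make the architectural differences above concrete, here is a toy in-memory model in Python. It is not a Kafka client: the `Topic` class, its methods, and the group names are hypothetical, and `crc32` is only a stand-in for the murmur2 hash used by Kafka's default partitioner. The sketch illustrates three ideas: records with the same key land in the same partition and keep their order, reading does not delete records, and each consumer group tracks its own offsets.

```python
from collections import defaultdict
from zlib import crc32


class Topic:
    """Toy model of a partitioned, append-only log (illustration only)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]
        # Each consumer group keeps its own read position per partition.
        self.offsets = defaultdict(lambda: [0] * num_partitions)

    def produce(self, key, value):
        # Same key -> same partition, so per-key ordering is preserved.
        # crc32 is an assumption for this sketch, not Kafka's real hash.
        p = crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p

    def poll(self, group):
        # Reading advances only this group's offsets; records stay in the log.
        records = []
        for p, log in enumerate(self.partitions):
            start = self.offsets[group][p]
            records.extend(log[start:])
            self.offsets[group][p] = len(log)
        return records

    def seek_to_beginning(self, group):
        # Replay: reset the group's offsets and re-read retained records.
        self.offsets[group] = [0] * len(self.partitions)


topic = Topic(num_partitions=3)
for i in range(3):
    topic.produce("account-42", f"debit-{i}")
topic.produce("account-7", "credit-0")

fraud = topic.poll("fraud-detection")
ledger = topic.poll("ledger")  # a second group still sees every record
```

Both groups receive all four records, and the three `account-42` events arrive in the order they were produced. Ordering across different keys is not guaranteed, which is why key choice matters for financial transactions and inventory updates.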

Kafka consulting costs vary significantly based on project scope, complexity, and engagement model. Here's what influences pricing:

Project complexity factors:

- Cluster size and message volumes (thousands vs millions of messages/second)

- Number of topics, partitions, and consumer groups

- Integration requirements (how many systems connect to Kafka)

- Security and compliance requirements (encryption, access controls, audit logging)

- High availability needs (multi-datacenter, disaster recovery)

- Migration complexity (parallel running, data validation, rollback procedures)

Typical engagement ranges:

- Architecture assessment: 1-3 weeks for comprehensive evaluation and design recommendations

- Implementation projects: 4-12 weeks depending on cluster complexity and integrations

- Migration projects: 8-16 weeks including parallel running, validation, and cutover

- Performance optimization: 2-6 weeks depending on investigation depth and changes required

- Ongoing managed services: Monthly retainer based on cluster size and support level

Cost optimization strategies: Start with an architecture assessment to validate the approach and identify potential issues early. Use a dedicated team model for long-term projects to reduce hourly rates. Consider phased implementations to spread costs and validate ROI incrementally.

Get accurate pricing: Schedule a consultation to discuss your specific requirements, data volumes, and timeline. We'll provide transparent pricing options tailored to your needs.

Yes, we specialize in risk-free migrations from legacy messaging systems to Kafka. Our migration approach ensures business continuity while modernizing your event streaming infrastructure.

Migration methodology:

- Assessment phase: We analyze your existing message flows, peak loads, retry patterns, and consumer behaviors to design an equivalent Kafka architecture that maintains or improves current functionality.

- Parallel running strategy: Rather than risky "big bang" migrations, we implement both systems simultaneously with message duplication and comprehensive validation. Your existing system continues serving production while Kafka proves itself.

- Gradual consumer migration: We move consumers to Kafka incrementally—starting with non-critical consumers, validating behavior, then progressively migrating mission-critical applications with rollback procedures at each step.

- Data validation framework: Custom validation tools continuously compare message delivery between systems, identifying any discrepancies before they impact production operations.

- Performance verification: Load testing confirms Kafka meets or exceeds current system performance across all scenarios including peak loads and failure conditions.

- Safe decommissioning: Only after extensive validation and stakeholder confidence do we decommission legacy systems, with rollback procedures remaining available during the transition period.

Timeline: Typical migrations complete in 8-16 weeks depending on system complexity. Zero-downtime cutover ensures business operations continue uninterrupted throughout the transition.
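As a rough illustration of the data validation step during parallel running, the sketch below compares what the legacy system and Kafka delivered over the same time window. It is a simplified assumption of how such a check could look, not a description of a specific tool: `fingerprint`, `compare_delivery`, and the `(message_id, payload)` record shape are hypothetical, and a real framework would also need to handle time windows, ordering, and payload normalization.

```python
import hashlib
from collections import Counter


def fingerprint(message_id: str, payload: bytes) -> str:
    # Hash id and payload together so altered payloads count as mismatches.
    return hashlib.sha256(message_id.encode() + b"\x00" + payload).hexdigest()


def compare_delivery(legacy, kafka):
    """Compare two iterables of (message_id, payload_bytes) records."""
    legacy_counts = Counter(fingerprint(m, p) for m, p in legacy)
    kafka_counts = Counter(fingerprint(m, p) for m, p in kafka)
    missing = legacy_counts - kafka_counts      # delivered by legacy only
    unexpected = kafka_counts - legacy_counts   # duplicates or extras on Kafka
    matched = legacy_counts & kafka_counts      # delivered by both systems
    return {
        "matched": sum(matched.values()),
        "missing_in_kafka": sum(missing.values()),
        "unexpected_in_kafka": sum(unexpected.values()),
    }


# Hypothetical sample window: message "2" is lost, message "3" is duplicated.
legacy_window = [("1", b"a"), ("2", b"b"), ("3", b"c")]
kafka_window = [("1", b"a"), ("3", b"c"), ("3", b"c")]
report = compare_delivery(legacy_window, kafka_window)
```

Using counters rather than sets means duplicate deliveries show up as discrepancies instead of being silently collapsed.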

We work with all Kafka deployment models—self-hosted open source clusters, managed cloud services (AWS MSK, Azure Event Hubs, Confluent Cloud), and hybrid architectures combining both approaches.

Managed service expertise:

- AWS MSK (Managed Streaming for Apache Kafka): Cluster configuration, VPC integration, IAM security, monitoring with CloudWatch, Connect integration, and cost optimization

- Confluent Cloud: Schema Registry implementation, ksqlDB development, Kafka Connect management, cluster linking, and enterprise feature utilization

- Azure Event Hubs: Kafka-compatible endpoint configuration, integration with Azure services, authentication configuration, and migration strategies

Self-hosted deployments: Complete infrastructure provisioning on AWS, Azure, GCP, or on-premises data centers. We handle installation, configuration, monitoring setup, security hardening, backup procedures, and operational runbooks.

Deployment recommendation factors:

- Managed services: Faster time-to-value, reduced operational overhead, automatic updates, ideal for teams without deep Kafka operations expertise

- Self-hosted: Greater control, potential cost savings at scale, specific compliance requirements, need for customizations unavailable in managed offerings

Hybrid architectures: Many organizations use managed services for development/staging environments while running self-hosted production clusters for cost optimization. We design architectures that work across deployment models.

Our recommendation: During architecture assessment, we evaluate your team capabilities, scale requirements, compliance needs, and budget to recommend the optimal deployment approach for your specific situation.

Scalability planning is fundamental to every Kafka architecture we design. Rather than building for today's needs and hoping it scales, we engineer for growth from day one.

Scalability strategies we implement:

- Proper partitioning strategy: We design topic partition counts based on current and projected throughput, ensuring horizontal scaling capability. Under-partitioned topics create bottlenecks; over-partitioned topics waste resources.

- Consumer group architecture: Properly structured consumer groups allow adding consumers, up to the number of partitions in the topic, to increase processing capacity without code changes or system downtime.

- Broker cluster sizing: We calculate broker capacity based on message rates, retention periods, replication factors, and headroom for traffic spikes—typically designing for 2-3x current peak loads.

- Monitoring and capacity planning: Comprehensive metrics collection tracks partition lag, disk utilization, network throughput, and CPU usage. Proactive alerting identifies capacity constraints before they impact performance.

- Future-proof topic design: Topic naming conventions, schema evolution strategies, and data models accommodate new use cases without requiring costly restructuring.
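The partition and broker sizing points above can be sketched as simple arithmetic. This is a commonly used rule of thumb rather than a definitive method: the function names are hypothetical, the per-partition throughput figures are placeholders that would have to be measured on your own hardware, and the storage estimate ignores compression, indexes, and free-space headroom. The `headroom` multiplier reflects the 2-3x peak-load margin mentioned above.

```python
import math


def partitions_needed(target_mb_s, producer_mb_s_per_partition,
                      consumer_mb_s_per_partition, headroom=2.0):
    """Partitions required so neither producers nor consumers bottleneck."""
    scaled = target_mb_s * headroom
    return math.ceil(max(scaled / producer_mb_s_per_partition,
                         scaled / consumer_mb_s_per_partition))


def cluster_storage_gb(ingest_mb_s, retention_days, replication_factor):
    """Raw log storage across the cluster for the retention window."""
    seconds = retention_days * 24 * 60 * 60
    return ingest_mb_s * seconds * replication_factor / 1024


# Placeholder inputs: 50 MB/s peak, 10 MB/s per partition on the producer
# side, 5 MB/s per partition on the consumer side, 7 days, replication 3.
partitions = partitions_needed(50, 10, 5, headroom=2.0)
storage_gb = cluster_storage_gb(50, retention_days=7, replication_factor=3)
```

With these placeholder inputs the slower consumer side drives the partition count, which is typical: the side with the lower per-partition throughput determines how many partitions are needed.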

Growth planning: Our architecture documents include capacity models showing exactly when additional brokers or partitions become necessary based on traffic growth projections. You'll know scaling triggers months in advance.
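A scaling trigger of this kind can be estimated with a compound-growth calculation. The sketch below is a simplified assumption, not our full capacity model: `months_until_threshold` is a hypothetical helper, the 80% utilization threshold is an illustrative default, and real traffic rarely grows at a constant monthly rate.

```python
import math


def months_until_threshold(current_peak, capacity, monthly_growth, threshold=0.8):
    """Months until peak load reaches threshold * capacity.

    Returns 0 if the threshold is already reached, and None if load is not
    growing (the threshold would never be reached).
    """
    limit = capacity * threshold
    if current_peak >= limit:
        return 0
    if monthly_growth <= 0:
        return None
    # Solve current_peak * (1 + g)^n >= limit for the smallest whole n.
    return math.ceil(math.log(limit / current_peak) / math.log(1 + monthly_growth))


# Placeholder inputs: 40 MB/s peak today, 100 MB/s cluster capacity,
# 10% month-over-month growth, scale-out planned at 80% utilization.
months = months_until_threshold(40, 100, 0.10)
```

Re-running the estimate as measured growth changes keeps the trigger date current, so adding brokers or partitions can be scheduled ahead of need.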

Proven at scale: Our fintech and ticketing implementations handle millions of messages per second with designed-in scaling paths. When you do need to scale, it's a planned, tested procedure rather than an emergency response.

Validation: Load testing proves the architecture scales as designed before production deployment, eliminating scaling surprises.

We offer flexible ongoing support ranging from ad-hoc consultation to comprehensive managed services, depending on your team's needs and Kafka operations maturity.

Support options:

Managed services: We operate your Kafka infrastructure as an extension of your team—handling monitoring, incident response, performance tuning, capacity planning, upgrades, and optimization. You focus on building applications while we ensure the platform remains reliable and performant.

Retained advisory: A monthly retainer provides access to senior Kafka consultants for architecture guidance, troubleshooting assistance, performance reviews, and strategic planning. Your team handles day-to-day operations with expert support available when needed.

On-demand consultation: Purchase consulting hours as needed for specific challenges, optimization projects, or upgrade assistance. No ongoing commitment—pay only for services you use.

Included with implementation: All implementation projects include a post-launch support period (typically 30-90 days) during which we remain available for questions, troubleshooting, and optimization guidance as you stabilize production operations.

Knowledge transfer: Every engagement includes comprehensive documentation, operational runbooks, troubleshooting guides, and training sessions designed to make your team self-sufficient. Our goal is empowerment, not dependency.

24/7 emergency support: Available for mission-critical production systems where downtime has significant business impact. Rapid-response team handles critical incidents outside business hours.

What most clients choose: Many organizations start with managed services during initial implementation, transition to retained advisory as their team develops Kafka expertise, then move to on-demand consultation for specialized needs. This progression path lets you optimize support costs as internal capabilities mature.